Ceph – an Overview

With software-defined storage (SDS) being adopted rapidly, it is worth looking at Ceph, the much-discussed open source SDS platform: what it is and how it can be used to provide an SDS solution today.

What is Ceph (can be pronounced sef or kef)?

Ceph is an open source distributed storage solution that is highly scalable, supporting both scale-up and scale-out on economical commodity hardware. Ceph can help transform IT infrastructure and the ability to manage large amounts of data. If an organisation needs multiple storage interfaces (object, block and file system) to access application data, Ceph may be a good fit going forward.

Architecture

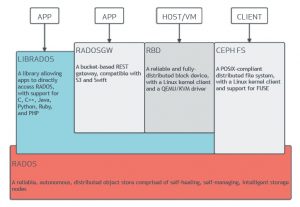

The foundation of Ceph is RADOS (Reliable Autonomic Distributed Object Store), which provides the multiple storage interfaces in a single unified storage cluster while supporting flexibility, high reliability and ease of management.

The Ceph RADOS backend converts data into objects and stores them in a distributed manner. The Ceph stores are accessible to clients via:

- CephFS (a standard Linux filesystem): a POSIX-compliant filesystem that uses a Ceph Storage Cluster to store its data.

- CephFS is now viewed as an enterprise-ready solution as of the Ceph release named Luminous; in prior releases it was not regarded as enterprise ready.

- RBD (a Linux block device driver): the Ceph block storage device, which mounts like a physical storage drive for use by both physical and virtual systems. Because it exposes a block device interface, a virtual block device is an ideal candidate to interact with Ceph.

- RADOSGW (RADOS Gateway): this interface is built on top of librados and provides applications with a RESTful gateway to the Ceph Storage Cluster, supporting both S3 (Amazon RESTful API) and Swift (OpenStack Swift API).

- LIBRADOS: the Ceph librados software libraries enable applications written in C, C++, Java, Python, PHP and several other languages to access Ceph’s object storage system using native APIs.
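
As a rough illustration of the librados interface listed above, the following Python sketch writes one object and reads it back using the `rados` Python bindings. The configuration path, the pool name and the object name are assumptions chosen for illustration, and the function only does anything useful when a reachable cluster and a client keyring are available.

```python
def store_and_fetch(pool, name, data, conffile="/etc/ceph/ceph.conf"):
    """Write `data` (bytes) to object `name` in `pool`, then read it back."""
    # Imported inside the function so this file still loads on machines
    # where the Ceph Python bindings are not installed.
    import rados

    cluster = rados.Rados(conffile=conffile)
    cluster.connect()
    try:
        # An I/O context is bound to a single pool.
        ioctx = cluster.open_ioctx(pool)
        try:
            ioctx.write_full(name, data)              # replace the whole object
            return ioctx.read(name, length=len(data)) # read the same number of bytes
        finally:
            ioctx.close()
    finally:
        cluster.shutdown()
```

Against a cluster that already has a pool called `mypool` (a hypothetical name), `store_and_fetch("mypool", "greeting", b"hello")` should hand back the bytes that were written.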

What makes up a Ceph Storage Cluster?

A Ceph storage cluster accommodates large numbers of Ceph nodes for scalability, fault tolerance and performance. Each node is based on commodity hardware and uses intelligent Ceph daemons that communicate with each other to:

- Store and retrieve data

- Replicate data

- Monitor and report on cluster health

- Redistribute data dynamically

- Ensure data integrity

- Recover from failures

A Ceph cluster requires at least one Ceph Monitor, one Ceph Manager and one Ceph OSD. A Ceph Metadata Server is also required when using CephFS.

- Monitors: A Ceph Monitor maintains maps of the cluster state, including the monitor map, manager map, the OSD map, and the CRUSH map. These maps are critical cluster state required for Ceph daemons to coordinate with each other. Monitors are also responsible for managing authentication between daemons and clients. At least three monitors are normally required for redundancy and high availability.

- Managers: A Ceph Manager daemon is responsible for keeping track of runtime metrics and the current state of the Ceph cluster, including storage utilization, current performance metrics, and system load. The Ceph Manager daemons also host Python-based plugins to manage and expose Ceph cluster information, including a web-based dashboard and REST API. At least two managers are normally required for high availability.

- Ceph OSDs: A Ceph OSD (object storage daemon) stores data, handles data replication, recovery and rebalancing, and provides some monitoring information to Ceph Monitors and Managers by checking other Ceph OSD daemons for a heartbeat. At least three Ceph OSDs are normally required for redundancy and high availability.

- MDSs: A Ceph Metadata Server stores metadata on behalf of the Ceph Filesystem. Ceph Metadata Servers allow POSIX file system users to execute basic commands without placing a burden on the Ceph Storage Cluster.
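
The monitor count recommended above follows from majority voting: the monitors can only agree on cluster state while more than half of them are reachable. The sketch below is plain arithmetic rather than Ceph code, and the function names are illustrative only.

```python
def quorum_size(monitors: int) -> int:
    """Smallest strict majority of `monitors`."""
    if monitors < 1:
        raise ValueError("a cluster needs at least one monitor")
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """How many monitors can be lost while a majority still survives."""
    return monitors - quorum_size(monitors)


# Print monitor count, quorum size and tolerated failures for a few layouts.
for count in (1, 2, 3, 5):
    print(count, quorum_size(count), tolerated_failures(count))
```

Two monitors tolerate no more failures than one, which is why three is the usual starting point for redundancy.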

Ceph Storage Clusters can be sized for different performance levels by designing the performance capabilities of the servers and storage type used in the nodes that make up the cluster.
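
Capacity is one input to that sizing exercise. As a back-of-the-envelope sketch, assuming a replicated pool that keeps three copies of every object and an assumed fill limit of 85% to leave headroom for recovery, usable space could be estimated as follows. The node and disk figures are made up for illustration.

```python
def usable_capacity_tb(nodes, disks_per_node, disk_tb, replicas=3, fill_limit=0.85):
    """Estimate usable capacity in TB for a replicated pool.

    `replicas` and `fill_limit` are assumptions for this sketch, not values
    read from a real cluster.
    """
    raw_tb = nodes * disks_per_node * disk_tb
    # Each object is stored `replicas` times, and some space is kept free.
    return raw_tb / replicas * fill_limit


# Hypothetical cluster: 4 nodes, 12 disks each, 8 TB per disk.
estimate = usable_capacity_tb(nodes=4, disks_per_node=12, disk_tb=8)
print(f"{estimate:.1f} TB usable")
```

With these example figures, 384 TB of raw disk comes out at roughly 109 TB usable, which shows how much the replica count affects the hardware needed.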

Storage infrastructure is undergoing tremendous change, particularly as organizations deploy infrastructure to support big data and private clouds. Traditional scale-up arrays are limited in scalability, and complexity can compromise cost-effectiveness. In contrast, scale-out storage infrastructure based on clustered storage servers has emerged as a way to deploy cost-effective and manageable storage at scale. With Ceph among the leading solutions, now may be the time to look at it as a possible storage solution.

The process that Ceph uses is quite complicated and will be discussed in a future blog.

Ref www.redhat.com

Ref www.ceph.com